The question "what do you use for AI agent infrastructure?" has become one of the most searched queries in the DevOps and platform engineering space. And for good reason: the global AI agent market is projected to grow from $5.1 billion in 2024 to $47.1 billion by 2030, representing a compound annual growth rate of nearly 45%. With 85% of enterprises expected to implement AI agents by the end of 2025, getting the infrastructure right has never been more critical.

Software quality assurance has changed dramatically over the past few years. Today, the velocity of software development demands more than traditional staging and shared QA environments. Releases are expected to be faster, integration cycles shorter, and quality standards higher. These pressures have inspired a growing interest in preview environments: ephemeral, production-like spaces spun up on demand for testing code changes in isolation. As 2025 approaches, organizations are discovering just how transformative these environments can be for QA processes and the broader software development lifecycle.

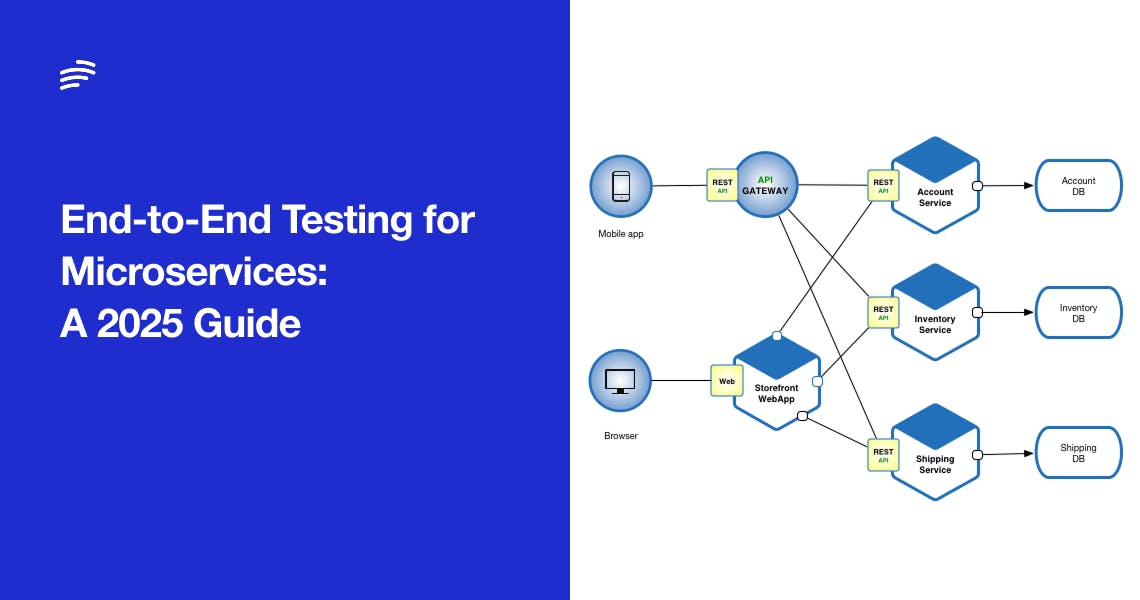

End-to-end testing in microservices can make or break your release velocity. This comprehensive guide explores how engineering leaders can balance quality and speed in 2025 by rethinking E2E testing strategies. From orchestrating services and taming flaky tests to leveraging full-stack preview environments (with platforms like Bunnyshell) for every pull request, we delve into best practices to ensure your distributed system works flawlessly – without grinding development to a halt.

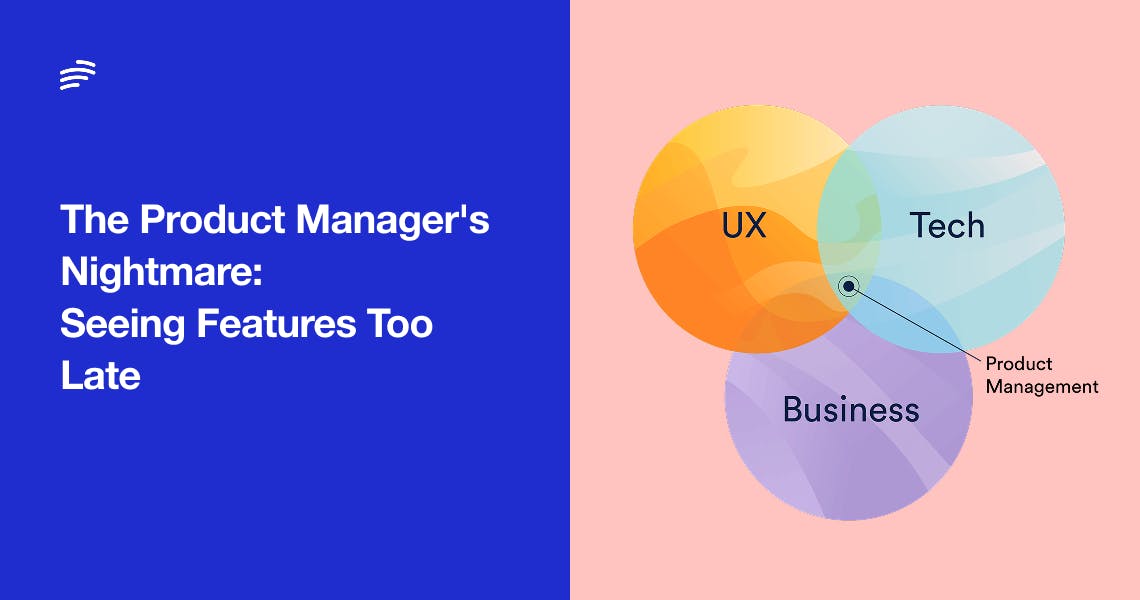

Stop losing sprints to late-stage feature changes. Discover why 60% of features need significant revisions after PM review, and how forward-thinking product teams are seeing features as they're built, not weeks later in staging. Learn the real cost of delayed feedback and the solution that's cutting development time by 50%.

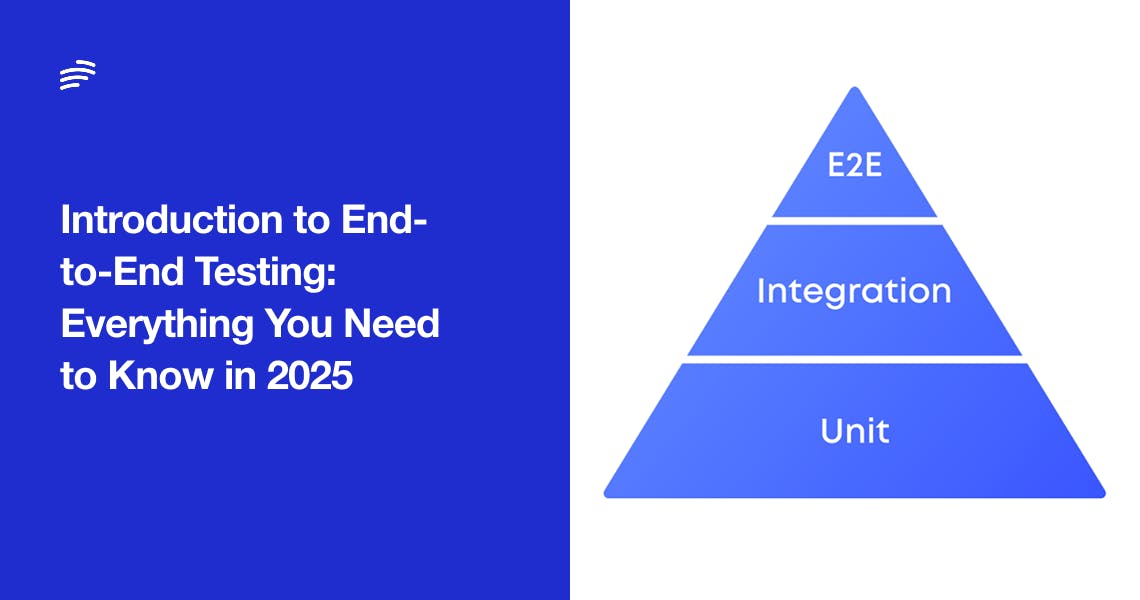

Discover the most effective strategies for end-to-end testing in 2025. This whitepaper explores how modern engineering teams can improve software quality by shifting testing left, automating critical user flows, and using preview environments for every pull request. Ideal for CTOs and engineering managers leading fast-moving development teams.

In today’s AI-driven world, SaaS startups can’t afford to stick to outdated development workflows. This article explores how modern teams of 5–20 developers can dramatically boost velocity by combining AI assistants like ChatGPT, code copilots like Cursor, preview environments with Bunnyshell, and smart Git workflows. Packed with practical advice and comparisons to legacy SDLC methods, it’s a must-read playbook for any team that wants to build faster without breaking things.

At Bunnyshell, we’re building the environment layer for modern software delivery. One of the hardest problems our users face is converting arbitrary codebases into production-ready environments, especially when dealing with monoliths, microservices, ML workloads, and non-standard frameworks.

To solve this, we built MACS: a multi-agent system that automates containerization and deployment from any Git repo. With MACS, developers can go from raw source code to a live, validated environment in minutes—without writing Docker or Compose files manually.

In this post, we’ll share how we architected MACS internally, the design patterns we borrowed, and why a multi-agent approach was essential for solving this problem at scale.

LLM-as-a-Judge is a method where large language models like GPT-4 are used to automatically evaluate the outputs of other AI models, replacing slow, expensive human review and outdated metrics like BLEU or ROUGE. By prompting an LLM to assess qualities such as accuracy, helpfulness, tone, or safety, teams can get fast, scalable, and surprisingly reliable evaluations that often align closely with human judgment. This approach enables continuous quality monitoring, faster iteration, and cost-effective scaling across use cases like chatbots, code generation, summarization, and moderation.
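To make the idea concrete, here is a minimal sketch of the judging loop described above, not the method used in the linked article. The prompt wording, the 1-5 scale, and the names `build_judge_prompt`, `parse_score`, `judge`, and `fake_llm` are all illustrative assumptions; `call_llm` stands in for whatever model client a team actually uses.

```python
import re
from typing import Callable, Optional

# Hypothetical prompt template; real rubrics are usually more detailed.
JUDGE_TEMPLATE = """You are an impartial evaluator.
Rate the RESPONSE to the QUESTION for {criterion} on a 1-5 scale.
Reply with a line of the form "Score: <n>" followed by a one-sentence reason.

QUESTION: {question}
RESPONSE: {response}
"""


def build_judge_prompt(question: str, response: str, criterion: str) -> str:
    """Fill the template with the item under evaluation."""
    return JUDGE_TEMPLATE.format(
        criterion=criterion, question=question, response=response
    )


def parse_score(reply: str) -> Optional[int]:
    """Extract a 1-5 score from the judge's reply, or None if it is missing."""
    match = re.search(r"Score:\s*([1-5])\b", reply)
    return int(match.group(1)) if match else None


def judge(
    question: str,
    response: str,
    criterion: str,
    call_llm: Callable[[str], str],
) -> Optional[int]:
    """Ask the judge model to grade one response on one criterion."""
    prompt = build_judge_prompt(question, response, criterion)
    return parse_score(call_llm(prompt))


def fake_llm(prompt: str) -> str:
    """Stand-in for a real model call so the sketch runs offline."""
    return "Score: 4\nMostly accurate but omits one detail."


if __name__ == "__main__":
    score = judge(
        "What does BLEU measure?",
        "N-gram overlap between a candidate and reference translations.",
        "accuracy",
        fake_llm,
    )
    print(score)
```

Passing the model call in as a function keeps the scoring logic testable without network access, and returning `None` on an unparseable reply lets the caller decide whether to retry or flag the item for human review.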
