Historically, we ran only unit and integration tests in our CI pipeline. At times, however, we still hit issues in production that could have been caught during development if we had tested and deployed our changes in an environment that resembled production.

Testing your application in a production-like environment can help you catch bugs early in the development lifecycle and increase the overall quality of the shipped product. However, configuring a CI/CD pipeline to spin up ephemeral production-like environments is not a trivial undertaking.

In this article, we’ll see how to use Kubernetes to create ephemeral environments that look like production in a cost-effective way.

Setup

We’ll be showcasing two approaches. The first is to have a shared Kubernetes cluster, where each ephemeral environment is a separate namespace but the underlying cluster is the same. The second is to have a separate cluster for each ephemeral environment.

The first approach is more cost-effective, while the second provides stronger separation between environments, which may be required for compliance.

We’ll be using GitLab for source control management and Google Kubernetes Engine to deploy our ephemeral environments.

Prerequisites

To follow along with the code examples, you will need:

- A GitLab account (free trial)

- Google Cloud Platform (90-day free trial with $300 in free credits)

- The gcloud CLI installed locally, or Google Cloud Shell

- Node.js web application (clone from here)

Demo Setup

First, you’ll need to set up the Google Cloud resources used in this post.

Service Account

Create a service account to use in your pipeline to interact with GCP:

```shell
gcloud iam service-accounts create ci-cd-gitlab \
  --description="This account is used for ephemeral CI/CD example." \
  --display-name="ci_cd_gitlab"
```

Next, grant this account the following roles:

- Service Account User

- Artifact Registry Administrator

- Kubernetes Engine Admin

For the purposes of this post, these permissions are broader than strictly necessary; in a production setting, you should scope them down to what your setup actually needs.
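If you prefer the command line to the console, the following is a minimal sketch of granting these roles with gcloud projects add-iam-policy-binding. It assumes the service account was created as ci-cd-gitlab in the same project, and maps the console names above to the predefined role IDs roles/iam.serviceAccountUser, roles/artifactregistry.admin, and roles/container.admin. The function name grant_ci_roles is hypothetical.

```shell
# Hypothetical helper: grant the CI service account the three roles above.
# Assumes the account was created as ci-cd-gitlab in project $1.
grant_ci_roles() {
  local project_id="$1"
  local sa_email="ci-cd-gitlab@${project_id}.iam.gserviceaccount.com"
  local role
  for role in roles/iam.serviceAccountUser \
              roles/artifactregistry.admin \
              roles/container.admin; do
    # One binding per role; stop at the first failure.
    gcloud projects add-iam-policy-binding "$project_id" \
      --member="serviceAccount:${sa_email}" \
      --role="$role" || return 1
  done
}
```

For example, grant_ci_roles my-project-id, where my-project-id is a placeholder for your own project ID.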

Now, download a JSON key for this service account; you will use it to set up the variables for your CI pipeline.

The contents of the JSON file are sensitive, so instead of committing the file, create a CI/CD variable called GOOGLE_KEY_JSON in your GitLab project and paste the contents there. This lets the pipeline use the key without it ever being stored in your repository.
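The key can also be created from the command line. A minimal sketch, assuming the same ci-cd-gitlab account as above; create_ci_key is a hypothetical helper name, and key.json is simply the default output path chosen here.

```shell
# Hypothetical helper: create a JSON key for the CI service account.
# $1 = project ID, $2 = output file (defaults to key.json).
create_ci_key() {
  local project_id="$1"
  local out_file="${2:-key.json}"
  gcloud iam service-accounts keys create "$out_file" \
    --iam-account="ci-cd-gitlab@${project_id}.iam.gserviceaccount.com"
}
```

After pasting the file's contents into the GOOGLE_KEY_JSON variable, delete the local copy so the key does not linger on your machine.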

Google Artifact Registry

Create a Docker repository in Artifact Registry, which you’ll use to push your web application Docker image. We have created a repository named ephemeraldemo, which we will use throughout this demo.
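Creating the repository with gcloud looks roughly like this. It is a sketch: create_docker_repo is a hypothetical helper, and the region argument (for example, us-central1) should match the REGION variable used by the pipeline, because images are pushed to REGION-docker.pkg.dev/PROJECT_ID/ephemeraldemo/IMAGE_NAME.

```shell
# Hypothetical helper: create the Docker-format repository used in this demo.
# $1 = repository name (defaults to ephemeraldemo), $2 = region.
create_docker_repo() {
  local repo_name="${1:-ephemeraldemo}"
  local region="$2"
  gcloud artifacts repositories create "$repo_name" \
    --repository-format=docker \
    --location="$region" \
    --description="Images for ephemeral preview environments"
}
```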

Variables for the CI Pipeline

Instead of hard-coding these values in our CI pipeline, we’ll reference them as pipeline variables.

You’ll need to create the following variables for use in subsequent steps:

- GOOGLE_KEY_JSON: The contents of the JSON key downloaded above

- PROJECT_ID: GCP project ID

- REGION: Region in which your cluster and other resources are configured, e.g., us-central1

- REPOSITORY_NAME: Name of the Artifact Registry repository created above

- ZONE: Zone in which your cluster and other resources are configured, e.g., us-central1-c

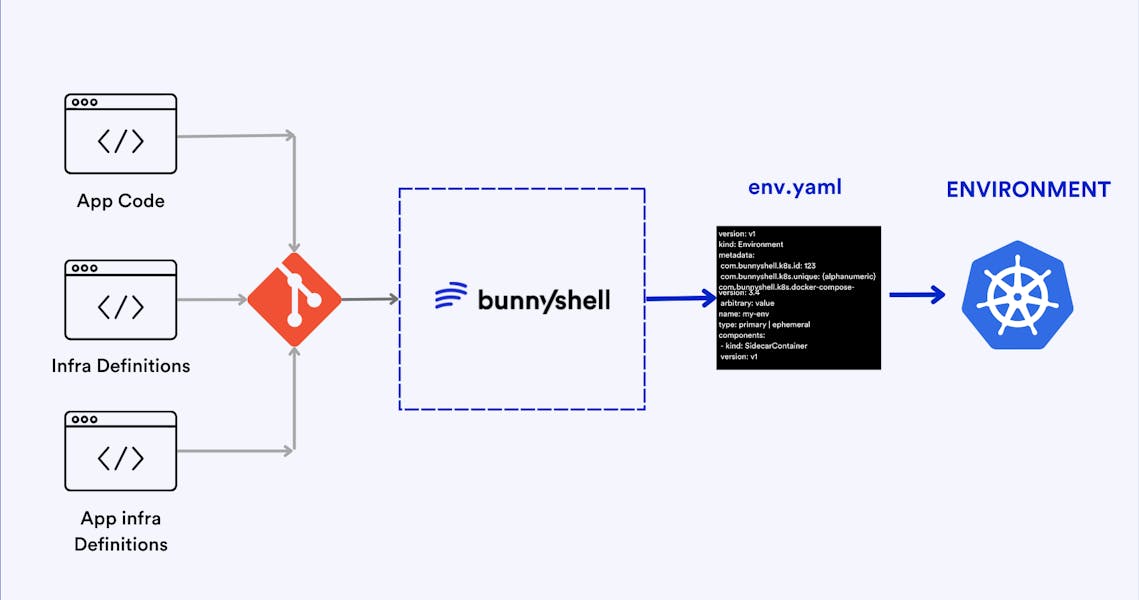
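Because a missing variable tends to surface later as a confusing gcloud or Docker error, it can help to check for these variables at the start of each job. Here is a minimal sketch of such a pre-flight check; check_ci_vars is a hypothetical name, and the variable list simply mirrors the one above.

```shell
# Hypothetical pre-flight check: fail fast with one clear message if any of
# the pipeline variables listed above is missing or empty.
check_ci_vars() {
  local missing="" name value
  for name in GOOGLE_KEY_JSON PROJECT_ID REGION REPOSITORY_NAME ZONE; do
    # Read the variable whose name is stored in $name. The names are fixed
    # constants in this loop, so eval never sees untrusted input.
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      missing="$missing $name"
    fi
  done
  if [ -n "$missing" ]; then
    echo "Missing CI/CD variables:$missing" >&2
    return 1
  fi
  return 0
}
```

In a job, you could call it as check_ci_vars || exit 1 before any gcloud command runs.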


Sample Workflow

This sample workflow shows you how to achieve an ephemeral production-like test environment using Kubernetes.

First, you need to understand what the workflow will look like for both a shared cluster and an isolated cluster.

Shared Cluster Approach

1. A developer creates a merge request for their changes.

2. The build is triggered: it creates a Docker image for the latest changes, tags it with the current branch name, and pushes it to Artifact Registry.

3. The pipeline creates a new namespace in the shared Kubernetes cluster, named after the branch.

4. The application is deployed to the namespace created in Step 3 using the Docker image built in Step 2.

5. The pipeline prints the external IP address of the newly created load balancer, where you can access the web application.

6. The namespace is cleaned up when the reviewer merges the request.
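The deploy_gke.sh script in this post covers deployment only, so the cleanup in the last step needs its own job. Below is a minimal sketch for the shared-cluster case, assuming kubectl already points at the shared cluster (for example, after gcloud container clusters get-credentials) and that the namespace is named after the branch slug; teardown_namespace is a hypothetical helper. In GitLab, a job attached to the review environment through environment:on_stop is one way to run it when the MR is merged or closed.

```shell
# Hypothetical teardown for the shared-cluster approach: delete the
# per-branch namespace, and with it every resource deployed into it.
teardown_namespace() {
  local namespace="$1"
  # Never delete an empty name or one of the built-in namespaces.
  case "$namespace" in
    ""|default|kube-system|kube-public|kube-node-lease)
      echo "Refusing to delete namespace '$namespace'" >&2
      return 1
      ;;
  esac
  # --ignore-not-found makes re-running the job harmless.
  kubectl delete namespace "$namespace" --ignore-not-found
}
```

Refusing the built-in namespaces guards against an empty or mistyped slug deleting something important.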

Isolated Cluster Approach

1. A developer creates a merge request for their changes.

2. The build is triggered: it creates a Docker image for the latest changes, tags it with the current branch name, and pushes it to Artifact Registry.

3. The pipeline creates a new Kubernetes cluster named after the branch.

4. The application is deployed to the default namespace of the cluster created in Step 3 using the Docker image built in Step 2.

5. The pipeline prints the external IP address of the newly created load balancer, where you can access the web application.

6. The cluster is deleted when the reviewer merges the request.
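For the isolated approach, the equivalent cleanup deletes the whole cluster. Again, this is a sketch rather than part of the original script: teardown_cluster is a hypothetical helper, and it assumes the job has already authenticated with the service account key. The --quiet flag matters in CI, because gcloud otherwise asks for confirmation before deleting a cluster.

```shell
# Hypothetical teardown for the isolated-cluster approach: delete the
# per-branch GKE cluster. $1 = cluster name, $2 = zone.
teardown_cluster() {
  local cluster_name="$1" zone="$2"
  if [ -z "$cluster_name" ] || [ -z "$zone" ]; then
    echo "usage: teardown_cluster CLUSTER_NAME ZONE" >&2
    return 1
  fi
  # Skip quietly if the cluster is already gone (e.g. the job is re-run).
  if ! gcloud container clusters describe "$cluster_name" --zone "$zone" >/dev/null 2>&1; then
    echo "Cluster $cluster_name not found; nothing to delete"
    return 0
  fi
  # --quiet disables the interactive confirmation prompt.
  gcloud container clusters delete "$cluster_name" --zone "$zone" --quiet
}
```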

Both of these workflows are captured in the following deploy_gke.sh script, which you will use in the .gitlab-ci.yml file to set up the CI pipeline.

First, let’s take a look at the deploy_gke.sh file:

```shell
#!/bin/bash

set -xe

CLUSTER_TYPE=$1
CLUSTER_NAME=$2
BRANCH_SLUG=$3
REGION=$4
ZONE=$5
PROJECT_ID=$6
DOCKER_IMAGE=$7

create_cluster() {
  # No --cluster-version pin: a pinned GKE version stops being offered once it
  # leaves support, so let the "regular" release channel pick the default.
  gcloud beta container --project "$PROJECT_ID" \
    clusters create "$CLUSTER_NAME" \
    --zone "$ZONE" \
    --no-enable-basic-auth \
    --release-channel "regular" \
    --machine-type "g1-small" \
    --image-type "COS_CONTAINERD" \
    --disk-type "pd-standard" \
    --disk-size "100" \
    --metadata disable-legacy-endpoints=true \
    --scopes "https://www.googleapis.com/auth/devstorage.read_only","https://www.googleapis.com/auth/logging.write","https://www.googleapis.com/auth/monitoring","https://www.googleapis.com/auth/servicecontrol","https://www.googleapis.com/auth/service.management.readonly","https://www.googleapis.com/auth/trace.append" \
    --max-pods-per-node "110" \
    --num-nodes "3" \
    --logging=SYSTEM,WORKLOAD \
    --monitoring=SYSTEM \
    --enable-ip-alias \
    --network "projects/$PROJECT_ID/global/networks/default" \
    --subnetwork "projects/$PROJECT_ID/regions/$REGION/subnetworks/default" \
    --no-enable-intra-node-visibility \
    --default-max-pods-per-node "110" \
    --no-enable-master-authorized-networks \
    --addons HorizontalPodAutoscaling,HttpLoadBalancing,GcePersistentDiskCsiDriver \
    --enable-autoupgrade \
    --enable-autorepair \
    --max-surge-upgrade 1 \
    --max-unavailable-upgrade 0 \
    --enable-shielded-nodes \
    --node-locations "$ZONE"
}

create_isolated_cluster() {
  echo "Checking if the cluster $CLUSTER_NAME already exists..."
  # describe succeeds only for an exact name match; grepping the list output
  # would also match clusters whose names merely contain $CLUSTER_NAME.
  if gcloud container clusters describe "$CLUSTER_NAME" --zone "$ZONE" >/dev/null 2>&1; then
    echo "Cluster already exists"
  else
    echo "Cluster doesn't exist. Creating the cluster"
    create_cluster
  fi
}

fetch_cluster_credentials() {
  echo "Fetching cluster credentials..."
  gcloud container clusters get-credentials "$CLUSTER_NAME" --zone "$ZONE"
}

create_namespace() {
  echo "Creating isolated namespace $BRANCH_SLUG inside the cluster $CLUSTER_NAME"
  # Exact-name lookup, for the same reason as in create_isolated_cluster.
  if kubectl get namespace "$BRANCH_SLUG" >/dev/null 2>&1; then
    echo "The namespace already exists. Recreating..."
    kubectl delete namespace "$BRANCH_SLUG"
  else
    echo "Namespace doesn't exist. Will create a new namespace $BRANCH_SLUG"
  fi
  kubectl create namespace "$BRANCH_SLUG"
}

deploy_resources() {
  # Replace the DOCKER_IMAGE placeholder in the deployment manifest.
  sed -i "s|DOCKER_IMAGE|$DOCKER_IMAGE|g" k8s/deployment.yaml
  # Apply the whole directory: with a glob (k8s/*.yaml), every file after the
  # first would be passed as an unexpected positional argument.
  if [ "$CLUSTER_TYPE" = "SHARED" ]; then
    kubectl apply -n "$BRANCH_SLUG" -f k8s/
  else
    kubectl apply -f k8s/
  fi
}

wait_for_ip() {
  external_ip=""
  while [ -z "$external_ip" ]; do
    echo "Waiting for end point..."
    if [ "$CLUSTER_TYPE" = "SHARED" ]; then
      external_ip=$(kubectl get svc app-lb -n "$BRANCH_SLUG" --template="{{range .status.loadBalancer.ingress}}{{.ip}}{{end}}")
    else
      external_ip=$(kubectl get svc app-lb --template="{{range .status.loadBalancer.ingress}}{{.ip}}{{end}}")
    fi
    if [ -z "$external_ip" ]; then
      sleep 10
    fi
  done
  echo "Your preview app is available at: $external_ip"
}

if [ "$CLUSTER_TYPE" = "SHARED" ]; then
  echo "Cluster type is $CLUSTER_TYPE, assuming cluster exists and deploying the resources"
  fetch_cluster_credentials
  create_namespace
  deploy_resources
  wait_for_ip
elif [ "$CLUSTER_TYPE" = "ISOLATED" ]; then
  echo "Cluster type is $CLUSTER_TYPE. Cluster will be created (if it doesn't exist)"
  create_isolated_cluster
  fetch_cluster_credentials
  deploy_resources
  wait_for_ip
else
  echo "ERR : Unknown CLUSTER_TYPE=$CLUSTER_TYPE"
  # Fail the CI job instead of silently succeeding.
  exit 1
fi
```

You can modify the create_cluster function in this script to change the characteristics of your isolated clusters. The script takes the following positional parameters:

- CLUSTER_TYPE: Switch that selects the SHARED or ISOLATED approach

- CLUSTER_NAME: Name of the existing shared cluster (SHARED) or of the cluster to create (ISOLATED)

- BRANCH_SLUG: Slug of the branch name, used as the namespace name in SHARED mode

- REGION: Region in which the Kubernetes cluster is deployed

- ZONE: Zone in which to create or look up the Kubernetes cluster

- PROJECT_ID: GCP project ID

- DOCKER_IMAGE: The URL of the Docker image pushed to Artifact Registry
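One caveat for ISOLATED mode: the pipeline in this post passes $CI_COMMIT_REF_SLUG as CLUSTER_NAME. GitLab slugs can be up to 63 characters long and may start with a digit, while GKE cluster names, per GKE's documented naming rules as we understand them, must start with a lowercase letter, end with a letter or digit, and be at most 40 characters long. The sketch below derives a compliant name; the mr- prefix and the function name cluster_name_for are our own choices, not part of the original setup.

```shell
# Hypothetical helper: turn a GitLab branch slug into a GKE cluster name.
# Assumes GKE's naming rules: lowercase letters, digits and hyphens, starting
# with a letter, ending with a letter or digit, at most 40 characters.
cluster_name_for() {
  local slug name
  # Lowercase the input and replace anything outside [a-z0-9-] with "-".
  slug=$(printf '%s' "$1" | tr '[:upper:]' '[:lower:]' | tr -c 'a-z0-9-' '-')
  # Prefix with "mr-" so the name always starts with a letter.
  name="mr-$slug"
  # Keep at most 40 characters, then drop any trailing hyphens.
  name=$(printf '%s\n' "$name" | cut -c1-40 | sed 's/-*$//')
  printf '%s\n' "$name"
}
```

Truncation means two long branch names that share their first 37 characters would map to the same cluster, so consider appending a short hash if that is a risk for your team. Whatever scheme you pick, use the same function in the teardown job so it deletes the right cluster.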

Next is the .gitlab-ci.yml file, which configures the CI pipeline for your repository. It defines two jobs, one for building and one for deploying, and both run only in merge request (MR) pipelines:

```yaml
stages:
  - build
  - deploy

variables:
  IMAGE_NAME: "helloworld-node"

docker-build:
  # Use the official docker image.
  image: docker:latest
  stage: build
  services:
    - docker:dind
  before_script:
    - echo "$GOOGLE_KEY_JSON" > key.json
    - cat key.json | docker login -u _json_key --password-stdin https://${REGION}-docker.pkg.dev
  script:
    - REGISTRY_URL="$REGION-docker.pkg.dev/$PROJECT_ID/$REPOSITORY_NAME/$IMAGE_NAME"
    - DOCKER_IMAGE="${REGISTRY_URL}:${CI_COMMIT_REF_SLUG}"
    - docker build -t "$DOCKER_IMAGE" .
    - docker push "$DOCKER_IMAGE"
  rules:
    - if: $CI_PIPELINE_SOURCE == "merge_request_event"
    - if: $CI_COMMIT_BRANCH && $CI_OPEN_MERGE_REQUESTS
      when: never

deploy-preview:
  image: google/cloud-sdk
  stage: deploy
  before_script:
    - echo "$GOOGLE_KEY_JSON" > key.json
    - gcloud config set project $PROJECT_ID
    - gcloud auth activate-service-account --key-file key.json
    - gcloud auth configure-docker ${REGION}-docker.pkg.dev
    - REGISTRY_URL="$REGION-docker.pkg.dev/$PROJECT_ID/$REPOSITORY_NAME/$IMAGE_NAME"
    - DOCKER_IMAGE="${REGISTRY_URL}:${CI_COMMIT_REF_SLUG}"
    - chmod +x deploy_gke.sh
  script:
    - ./deploy_gke.sh ISOLATED "$CI_COMMIT_REF_SLUG" "$CI_COMMIT_REF_SLUG" "$REGION" "$ZONE" "$PROJECT_ID" "$DOCKER_IMAGE"
  rules:
    - if: $CI_PIPELINE_SOURCE == "merge_request_event"
    - if: $CI_COMMIT_BRANCH && $CI_OPEN_MERGE_REQUESTS
      when: never
```

You can also configure other jobs, such as unit and integration tests, to run on every commit. Building and deploying Docker images for every commit on every branch, however, is neither cost-effective nor scalable.

Instead, changes are deployed only when an MR is raised, which lets the reviewer evaluate them in a production-like environment before accepting them. The rules section of each job in .gitlab-ci.yml makes the job run only in merge request pipelines, and never in plain branch pipelines for branches that already have an open MR.

The final bit in this workflow is the Kubernetes resource YAML files. You can find these in the k8s folder in the repository. For the purpose of this example, it's a simple deployment exposed via a load balancer service.
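The manifests in the k8s folder are not reproduced here, but a minimal pair consistent with deploy_gke.sh might look like the sketch below: a Deployment running the preview image and a LoadBalancer Service named app-lb, which is the Service that wait_for_ip polls. The deployment name, the app label, and container port 8080 are assumptions about the sample app, and render_manifests is a hypothetical helper that substitutes the image at render time instead of editing files in place with sed -i.

```shell
# Hypothetical sketch: print a Deployment plus a LoadBalancer Service named
# app-lb for the given image. Names, labels and port 8080 are assumptions.
render_manifests() {
  local image="$1"
  cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: helloworld-node
spec:
  replicas: 1
  selector:
    matchLabels:
      app: helloworld-node
  template:
    metadata:
      labels:
        app: helloworld-node
    spec:
      containers:
        - name: app
          image: ${image}
          ports:
            - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: app-lb
spec:
  type: LoadBalancer
  selector:
    app: helloworld-node
  ports:
    - port: 80
      targetPort: 8080
EOF
}
```

Piping the output into kubectl, as in render_manifests "$DOCKER_IMAGE" | kubectl apply -n "$BRANCH_SLUG" -f -, applies both objects in one step.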

If you already run Kubernetes in production, you likely have YAML resources you can reuse here. You can also reuse your existing deployment tooling: for example, if you deploy to production with Helm charts, you can use the same charts in the CI pipeline by modifying the deploy_gke.sh file.
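As an illustration of the Helm route, deploy_resources could be swapped for something like the following. This is a sketch under assumptions: the chart lives at ./chart and exposes image.repository and image.tag values, a common convention but not guaranteed for your chart; deploy_with_helm is a hypothetical name.

```shell
# Hypothetical replacement for deploy_resources when production uses Helm.
# Assumes a chart at ./chart with image.repository and image.tag values.
deploy_with_helm() {
  local release="$1" namespace="$2" repository="$3" tag="$4"
  helm upgrade --install "$release" ./chart \
    --namespace "$namespace" \
    --create-namespace \
    --set image.repository="$repository" \
    --set image.tag="$tag" \
    --wait
}
```

Because helm upgrade --install installs the release if it is missing and upgrades it otherwise, each new push to the MR updates the existing release, and --create-namespace removes the need for a separate create_namespace step.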

You can also provision additional GCP resources, such as Cloud SQL instances or Pub/Sub topics, by extending the deploy_resources function in the deploy_gke.sh script.
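For example, if the application publishes to Pub/Sub, each preview environment could get its own topic and subscription named after the branch slug. A minimal sketch; the events- naming scheme and the name create_branch_pubsub are hypothetical, and these resources would need to be deleted in your teardown job as well.

```shell
# Hypothetical extension of deploy_resources: a per-branch Pub/Sub topic and
# subscription. $1 = branch slug. Resource names are illustrative only.
create_branch_pubsub() {
  local slug="$1"
  local topic="events-${slug}"
  local subscription="events-${slug}-sub"
  # Create each resource only if it does not exist yet.
  if ! gcloud pubsub topics describe "$topic" >/dev/null 2>&1; then
    gcloud pubsub topics create "$topic" || return 1
  fi
  if ! gcloud pubsub subscriptions describe "$subscription" >/dev/null 2>&1; then
    gcloud pubsub subscriptions create "$subscription" --topic="$topic" || return 1
  fi
}
```

The describe-then-create check keeps the step safe to re-run, which matters because every push to the MR re-triggers the pipeline.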

Conclusion

Testing your application in a production-like environment before releasing it lets you catch bugs and edge cases early in the development lifecycle. With Kubernetes, you can set up ephemeral test environments either as an isolated cluster per merge request or as a namespace per merge request in a shared cluster.

Each approach has trade-offs. Isolated clusters give you complete isolation, but they are not very cost-effective and can quickly lead to cluster sprawl in even a moderately large development team. Still, if you work in an industry where compliance requires that level of isolation, a shared cluster is simply not an option.

Where there are no such compliance requirements, the shared cluster approach is the better fit: it is more cost-effective and makes better use of the underlying hardware.

So, if you are using Kubernetes for ephemeral environments and have no regulatory or compliance constraints, go ahead and choose the shared cluster approach.